There are plenty of great things to say about caching. There are plenty of issues to point out as well. We want to shake off the caching dust and challenge the assumption that proxy caching is the go-to solution to poor performance. We can do better than a proxy cache!

Proxy caching – An oldie (but not so goldie)

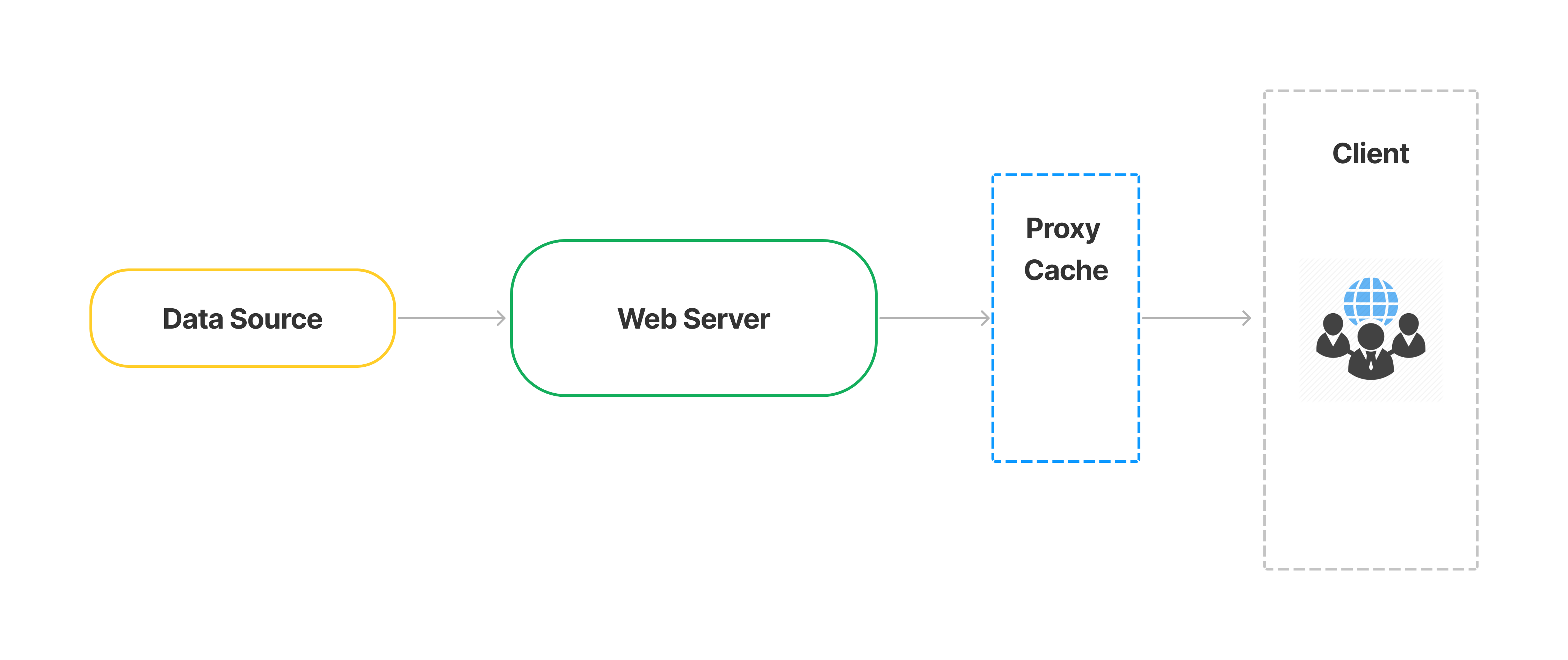

Caching. An old friend to many. Client-side proxy caching has long been the no. 1 way to solve performance issues and provide your visitors with a speedy frontend experience. (Well, unless you’re the first visitor on the page, of course…)

The traditional proxy cache, however, comes with two main challenges that you need to solve:

👉 Cache hydration

👉 Cache invalidation

What is cache hydration?

In a traditional proxy cache, the first website visitor of any page often suffers a performance hit.

Basically, every request for a webpage first checks whether there’s a copy in the cache and, if there is, serves it. If there isn’t, the web server needs to connect to the database, generate the page, and save it in the cache. That takes time, thus hurting the first visitor’s response time (time to first byte).

The second visitor – and any subsequent visitor – then reaps the benefits and gets a faster response time. But that doesn’t really help the first visitor one whit.
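To make the hydration cost concrete, here is a minimal sketch of a read-through cache in Python. It is not any particular proxy’s implementation: `ProxyCache`, `slow_render`, and the `/pricing` URL are made-up names for illustration, and the short sleep only stands in for database queries and page generation.

```python
import time

render_calls = []  # records every time the origin had to build a page


def slow_render(url):
    """Hypothetical origin: stands in for database queries and page generation."""
    render_calls.append(url)
    time.sleep(0.05)
    return f"<html>{url}</html>"


class ProxyCache:
    """Toy read-through cache: render on a miss, serve the stored copy on a hit."""

    def __init__(self, render):
        self._render = render
        self._pages = {}

    def get(self, url):
        if url in self._pages:
            return self._pages[url], "hit"
        page = self._render(url)  # slow path, paid by the first visitor
        self._pages[url] = page
        return page, "miss"


cache = ProxyCache(slow_render)
first = cache.get("/pricing")   # first visitor: miss, waits for the render
second = cache.get("/pricing")  # second visitor: hit, served from the cache
```

Only the first call reaches `slow_render`. Every later call for the same URL is answered from the stored copy.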

What is cache invalidation?

The second challenge occurs when the cache needs to refresh. That’s what computer scientists typically refer to as cache invalidation.

Often this is solved by configuring how long a page should stay in the cache before it is removed (its time to live, or TTL). Each time a page expires, the next visitor to request it suffers the same performance hit as the very first visitor did. So, from a performance perspective, a long-lived cache is ideal. But at some point, the website editors will publish a new version of a page – and they will most definitely expect the visitors to be able to read the new version.

Time-based cache invalidation is therefore a compromise between performance and how quickly updates reach your visitors.
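The trade-off is easy to see in a small sketch. The Python below is an illustration only, assuming a hypothetical `TtlCache` with a 60-second lifetime and a fake clock so the example is deterministic. The `content` dict plays the role of the CMS.

```python
class TtlCache:
    """Toy cache where every entry expires a fixed number of seconds after it was stored."""

    def __init__(self, render, ttl_seconds, clock):
        self._render = render
        self._ttl = ttl_seconds
        self._clock = clock      # injected so the example does not depend on real time
        self._entries = {}       # url -> (page, expires_at)

    def get(self, url):
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now < entry[1]:
            return entry[0], "hit"
        page = self._render(url)  # expired or never cached: this visitor pays
        self._entries[url] = (page, now + self._ttl)
        return page, "miss"


content = {"/news": "v1"}  # hypothetical CMS content
now = [0.0]                # fake clock, in seconds
cache = TtlCache(lambda url: content[url], ttl_seconds=60, clock=lambda: now[0])

at_start = cache.get("/news")      # miss: the first visitor pays
content["/news"] = "v2"            # an editor publishes a new version
now[0] = 30.0
before_expiry = cache.get("/news") # hit, but still the old version
now[0] = 61.0
after_expiry = cache.get("/news")  # miss: a random visitor pays, and finally sees v2
```

A longer TTL means fewer misses but a longer wait before `v2` shows up. A shorter TTL flips that around.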

But no one really likes a compromise…

Next generation caching – let’s do better

Frankly, it’s time to do better. Stop sacrificing that first visitor. Stop the conflict between new content and high performance.

Not to toot our own horn (all right, probably a little bit…) but Enterspeed solves the issues without making compromises.

The new (and better) approach

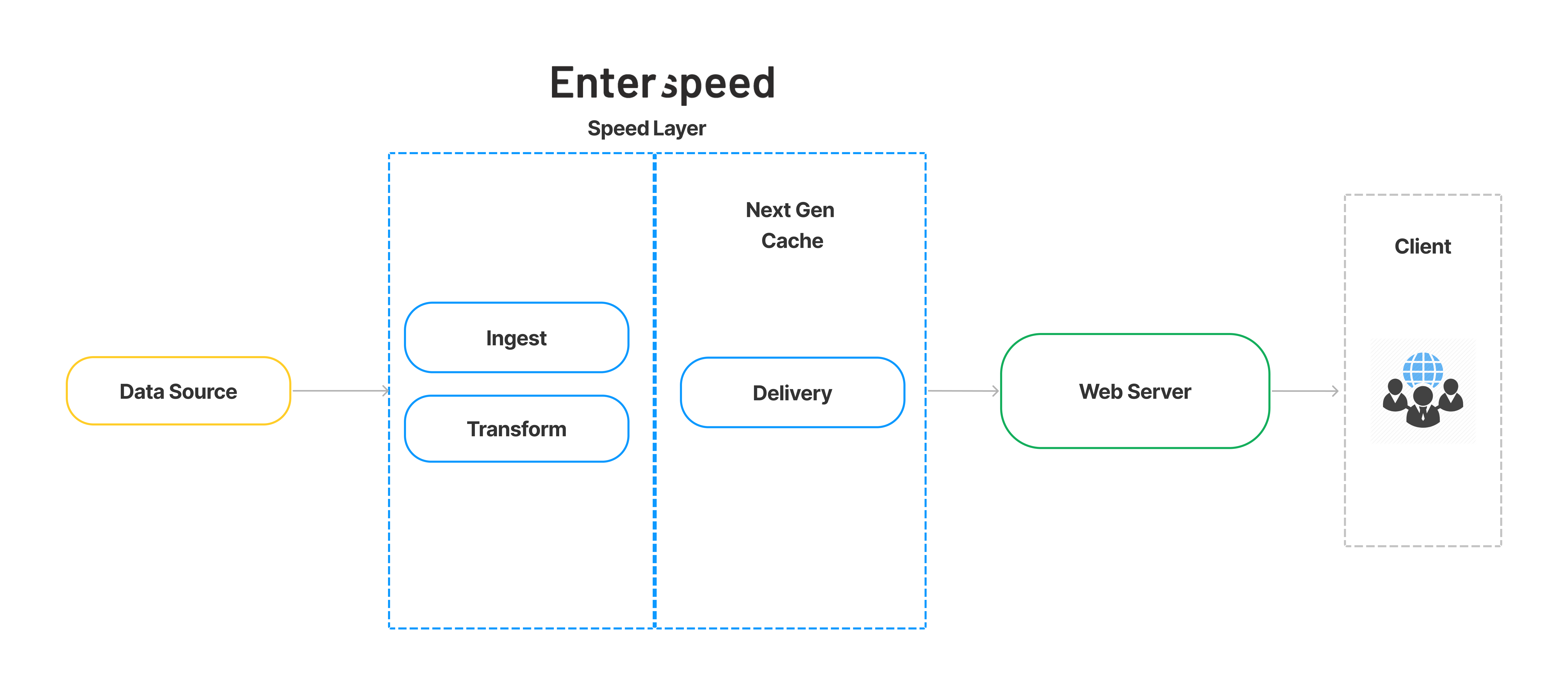

API caching is an alternative to the client-side proxy HTML cache. By caching at the API level, you don’t have to deal with cache hydration or invalidation. It’s also the strategy that we subscribe to.

Essentially, we optimise the API instead – meaning that we focus on much more efficient backend resources.

How do we manage to eliminate issues that have been deemed a necessary evil for so long? Well, basically we do three things: First, we ingest data from any – and multiple – data sources into Enterspeed. Then we transform and store the data, so it’s ready for the frontend. And lastly, we push the data very speedily to the frontend.

Every time someone makes changes to the data, we ingest, transform, and push the new data. Voila!
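The ingest, transform, and push steps can be sketched in a few lines of Python. To be clear, this is not Enterspeed’s actual API: `DeliveryStore`, `transform`, and `ingest` are hypothetical names, and the sketch only shows the general idea of preparing data on publish rather than on the first read.

```python
class DeliveryStore:
    """Hypothetical store of frontend-ready views, keyed by URL."""

    def __init__(self):
        self._views = {}

    def push(self, url, view):
        self._views[url] = view

    def get(self, url):
        # A plain lookup: nothing is rendered at read time.
        return self._views.get(url)


def transform(source_item):
    """Hypothetical transform: shape raw source data into what the frontend needs."""
    return {"title": source_item["title"].strip(), "body": source_item["body"]}


def ingest(store, url, source_item):
    """Runs whenever the source data changes, so the work happens at publish time."""
    store.push(url, transform(source_item))


store = DeliveryStore()
ingest(store, "/about", {"title": " About us ", "body": "v1"})
first_read = store.get("/about")      # the very first visitor already gets prepared data

ingest(store, "/about", {"title": "About us", "body": "v2"})
after_publish = store.get("/about")   # the new version is there without any expiry
```

Because the write path does the heavy work, there is no miss branch in `get` and no TTL to tune.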

Because the delivery data in Enterspeed is always updated with the newest version of the data sent to Enterspeed, you don’t need to hydrate a proxy cache.

We do the heavy lifting of preparing the data as soon as the editors publish the pages, so the newest version of the data is always available in all of our Delivery API’s regions.

No one needs to tolerate any performance hit. The data is served blazingly fast to everybody – including the first page visitor.

In short:

👉 There is no TTL (time to live)

👉 There is no hydration cost (first heavy load)

👉 We reduce the TTFB (time to first byte) on the delivery API

This also means that we can push the finished data to multiple regions simultaneously. With a proxy cache, you normally need to hydrate the cache in every region separately, which effectively leaves several visitors to pay the price of poor performance – but that’s another example of an intolerable compromise. We won’t have it. Period.
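Here is a rough way to picture the difference, again as a Python sketch under made-up assumptions. The region names and the visit sequence are invented, and the push is written as a simple loop rather than a truly simultaneous operation.

```python
REGIONS = ["eu-west", "us-east", "ap-south"]  # hypothetical region names


def cold_misses_with_regional_caches(visits):
    """Each region hydrates on its own: the first visit in every region is a miss."""
    warmed = set()
    misses = 0
    for region in visits:
        if region not in warmed:
            warmed.add(region)
            misses += 1
    return misses


def publish_to_all_regions(regions, url, view):
    """On publish, write the prepared view to every regional store up front."""
    return {region: {url: view} for region in regions}


visits = ["eu-west", "us-east", "eu-west", "ap-south", "us-east"]

proxy_misses = cold_misses_with_regional_caches(visits)

stores = publish_to_all_regions(REGIONS, "/about", {"title": "About us"})
push_misses = sum(1 for region in visits if "/about" not in stores[region])
```

With regional proxy caches, one visitor per region pays the hydration cost. With the data pushed ahead of time, none of the visits in this example lands on an empty region.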

Enterspeed is (also) a cache. But we’re not a proxy cache.

We are a Next Generation Cache 👏🕺

20 years of experience with web technology and software engineering. Loves candy, cake, and coaching soccer.